TOC

一 mutation操作

ClickHouse提供了Update和Delete两个mutation操作,但是修改的代价比较大,因为ClickHouse使用类似于LSM的底层存储结构,文件是不可修改、不可变的,新操作只会写到新文件中。因此哪怕只更新一条记录,也需要重新生成一个新的数据片段,会将该记录修改后的内容以及该记录所在的分区中其他未修改的所有记录写入到新的数据片段。因此mutation操作最好是批量操作,涉及的分区数尽量少,这样性能会比较高。

二 mutation操作测试

1 初始化

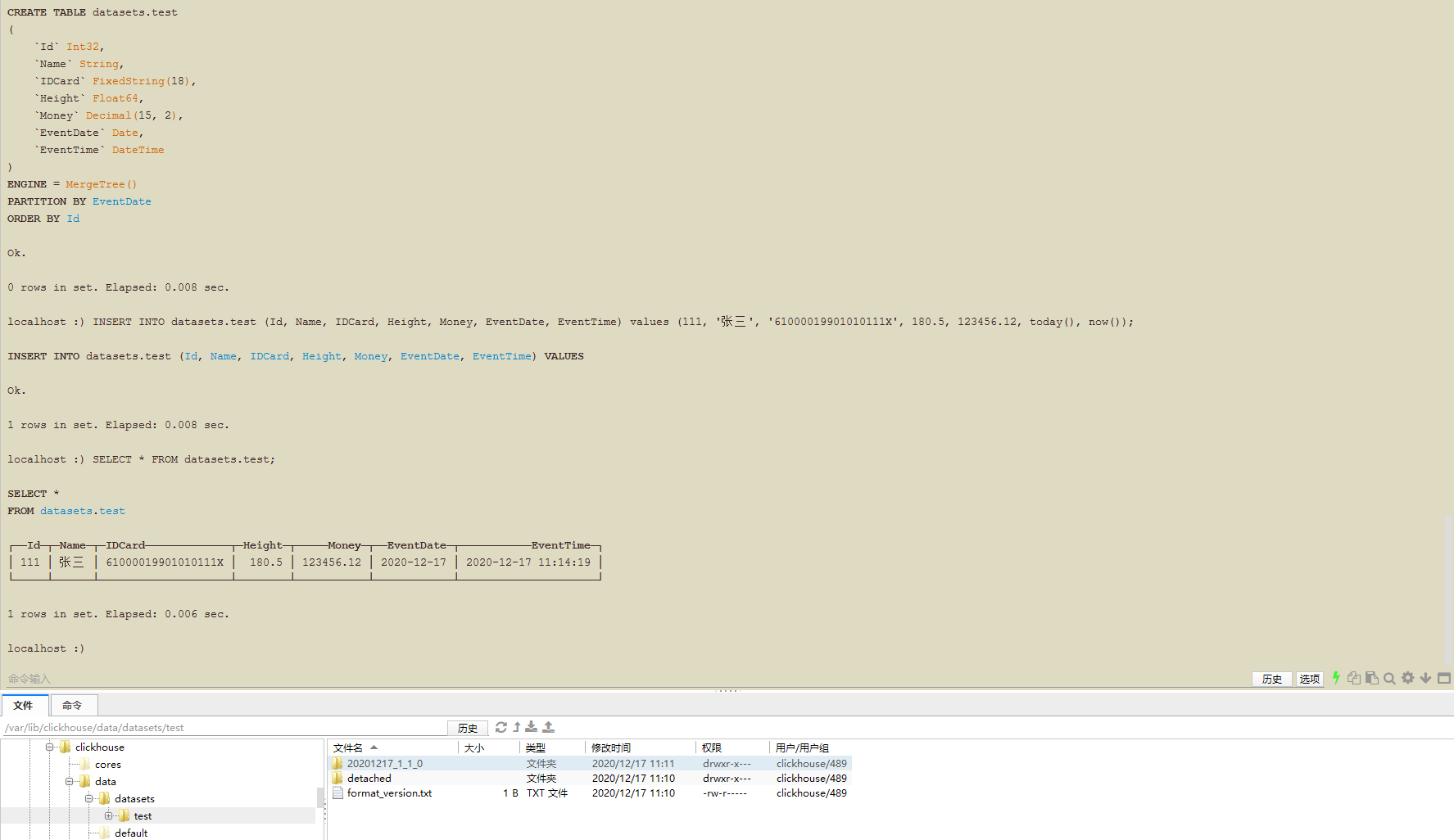

CREATE TABLE datasets.test

(

`Id` Int32,

`Name` String,

`IDCard` FixedString(18),

`Height` Float64,

`Money` Decimal(15, 2),

`EventDate` Date,

`EventTime` DateTime

)

ENGINE = MergeTree()

PARTITION BY EventDate

ORDER BY Id;

INSERT INTO datasets.test (Id, Name, IDCard, Height, Money, EventDate, EventTime) values (111, '张三', '61000019901010111X', 180.5, 123456.12, today(), now());

创建表并写入一条数据,我们可以看到数据写入成功,并且生成一个数据片段20201217_1_1_0。

2 执行Update操作

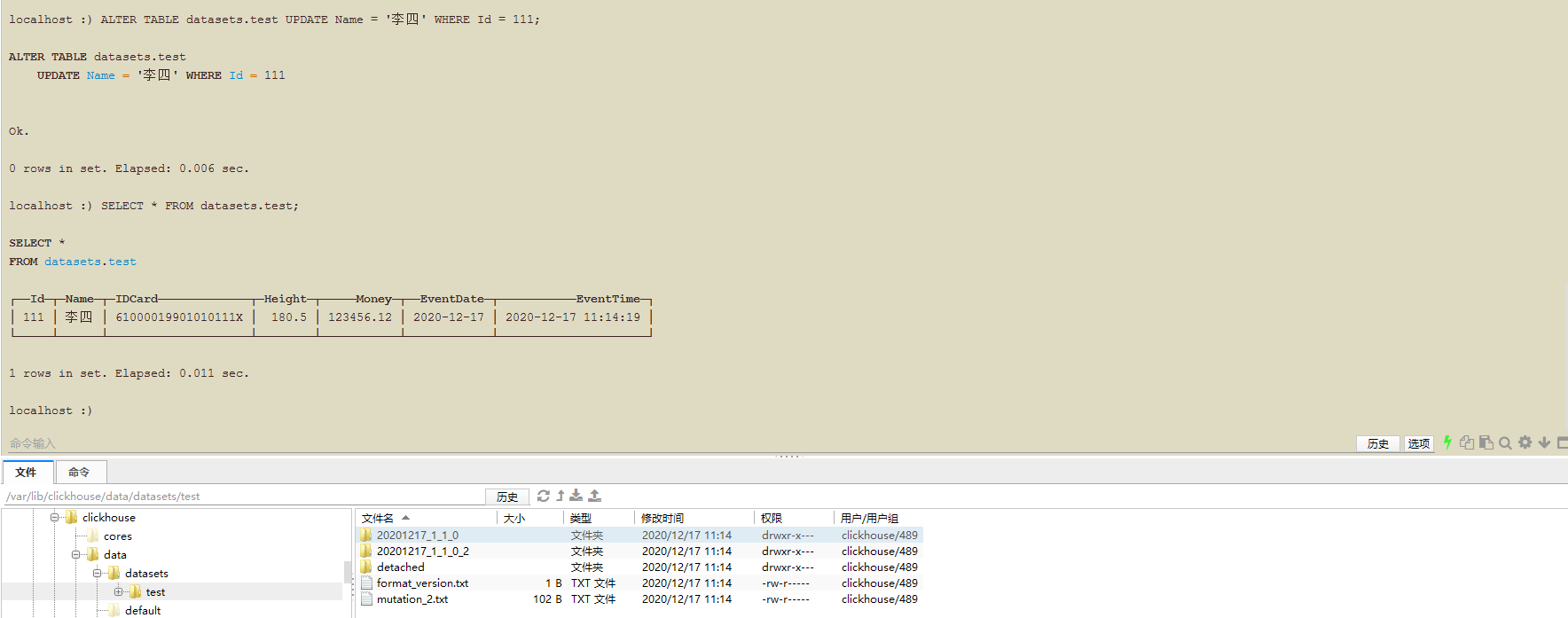

ALTER TABLE datasets.test UPDATE Name = '李四' WHERE Id = 111;

执行ALTER TABLE ... UPDATE ...命令,然后查询,可以看到结果Name字段的值已经更新。

并且生成了mutation_2.txt文件和新的数据片段20201217_1_1_0_2。

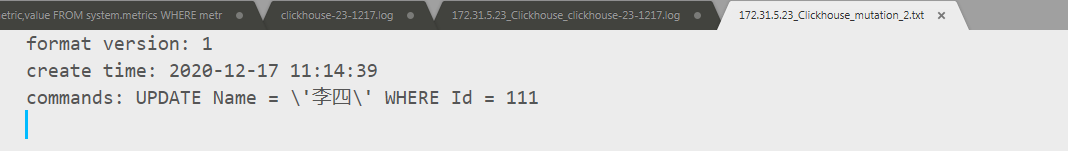

mutation_2.txt中存储了mutation命令。

SELECT

partition,

name,

active,

path

FROM system.parts

WHERE (database = 'datasets') AND (table = 'test');

旧的数据片段和新的数据片段同时存在,但是并不会出现查询结果不正常的情况,因为在元数据内会把旧的数据片段标记为未激活状态,也就是说查询时会排除。

同时后台线程会定期扫描active为0的数据片段并删除。

接下来查看日志详细操作。可以看到线程171受理了客户端请求,把修改命令写入mutation_2.txt文件,并在system.mutations表插入记录后后就向客户端返回。

线程13执行mutation内容,执行时会先根据WHERE条件进行查询,然后把修改后的内容以及未修改的内容写入到临时数据片段tmp_mut_20201217_1_1_0_2,整个操作执行完成后进行rename操作,把数据片段文件名修改为20201217_1_1_0_2,该操作与Insert操作步骤相同。

2020.12.17 11:14:39.761853 [ 171 ] {0469ecd3-99d8-456c-bb65-9decf36bdf27} <Debug> executeQuery: (from [::1]:40442) ALTER TABLE datasets.test UPDATE Name = '李四' WHERE Id = 111

2020.12.17 11:14:39.765254 [ 171 ] {0469ecd3-99d8-456c-bb65-9decf36bdf27} <Information> datasets.test: Added mutation: mutation_2.txt

2020.12.17 11:14:39.765488 [ 171 ] {0469ecd3-99d8-456c-bb65-9decf36bdf27} <Debug> MemoryTracker: Peak memory usage (for query): 0.00 B.

2020.12.17 11:14:39.765652 [ 171 ] {} <Debug> MemoryTracker: Peak memory usage (total): 0.00 B.

2020.12.17 11:14:39.765744 [ 171 ] {} <Information> TCPHandler: Processed in 0.004 sec.

2020.12.17 11:14:39.767023 [ 13 ] {} <Debug> DiskLocal: Reserving 1.00 MiB on disk `default`, having unreserved 167.87 GiB.

2020.12.17 11:14:39.767787 [ 13 ] {} <Debug> datasets.test (SelectExecutor): Key condition: (column 0 in [111, 111])

2020.12.17 11:14:39.767899 [ 13 ] {} <Debug> datasets.test (SelectExecutor): MinMax index condition: unknown

2020.12.17 11:14:39.767976 [ 13 ] {} <Debug> datasets.test (SelectExecutor): Selected 1 parts by date, 1 parts by key, 1 marks to read from 1 ranges

2020.12.17 11:14:39.768063 [ 13 ] {} <Trace> MergeTreeSelectProcessor: Reading 1 ranges from part 20201217_1_1_0, approx. 8192 rows starting from 0

2020.12.17 11:14:39.768159 [ 13 ] {} <Trace> InterpreterSelectQuery: FetchColumns -> Complete

2020.12.17 11:14:39.768318 [ 13 ] {} <Trace> Aggregator: Aggregating

2020.12.17 11:14:39.768611 [ 13 ] {} <Trace> Aggregator: Aggregation method: without_key

2020.12.17 11:14:39.768795 [ 13 ] {} <Trace> Aggregator: Aggregated. 1 to 1 rows (from 0.000 MiB) in 0.000 sec. (2565.938 rows/sec., 0.022 MiB/sec.)

2020.12.17 11:14:39.768875 [ 13 ] {} <Trace> Aggregator: Merging aggregated data

2020.12.17 11:14:39.768991 [ 13 ] {} <Trace> virtual DB::MergingAndConvertingBlockInputStream::~MergingAndConvertingBlockInputStream(): Waiting for threads to finish

2020.12.17 11:14:39.769100 [ 13 ] {} <Trace> datasets.test (MergerMutator): Mutating part 20201217_1_1_0 to mutation version 2

2020.12.17 11:14:39.770461 [ 13 ] {} <Debug> datasets.test (SelectExecutor): Key condition: unknown

2020.12.17 11:14:39.770538 [ 13 ] {} <Debug> datasets.test (SelectExecutor): MinMax index condition: unknown

2020.12.17 11:14:39.770583 [ 13 ] {} <Debug> datasets.test (SelectExecutor): Selected 1 parts by date, 1 parts by key, 1 marks to read from 1 ranges

2020.12.17 11:14:39.770762 [ 13 ] {} <Trace> MergeTreeSelectProcessor: Reading 1 ranges from part 20201217_1_1_0, approx. 8192 rows starting from 0

2020.12.17 11:14:39.770856 [ 13 ] {} <Trace> InterpreterSelectQuery: FetchColumns -> Complete

2020.12.17 11:14:39.773027 [ 13 ] {} <Trace> datasets.test: Renaming temporary part tmp_mut_20201217_1_1_0_2 to 20201217_1_1_0_2.

线程14发现旧的数据片段并进行移除。

2020.12.17 11:23:40.846878 [ 14 ] {} <Trace> datasets.test: Found 1 old parts to remove.

2020.12.17 11:23:40.846963 [ 14 ] {} <Debug> datasets.test: Removing part from filesystem 20201217_1_1_0.

3 执行Delete操作

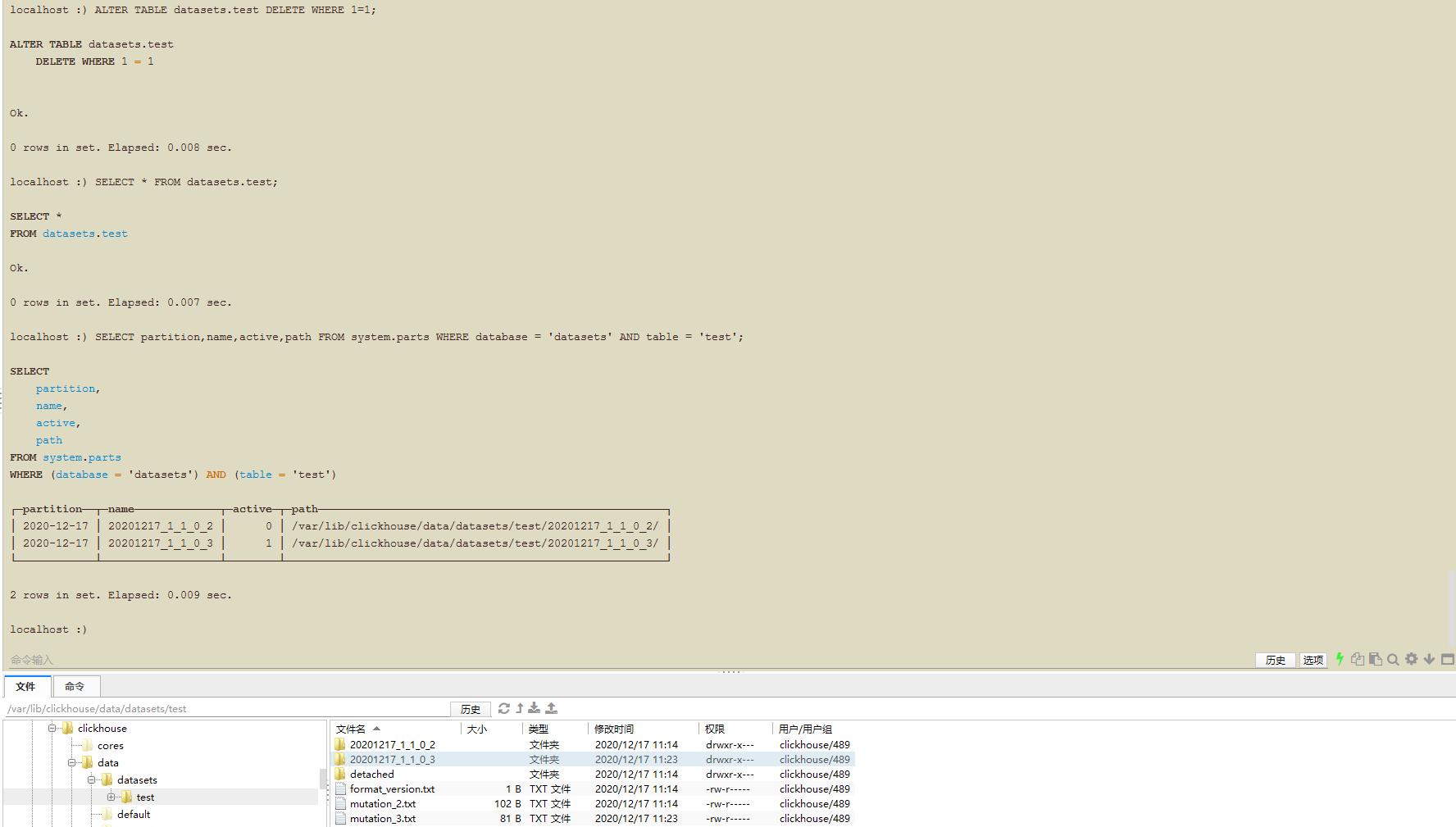

ALTER TABLE datasets.test

DELETE WHERE 1 = 1

Delete操作与Update操作整体流程类似,唯一不同的就是数据内容,Update操作新生成的数据片段包括原来数据片段未修改的内容以及修改后的内容,Delete操作新生成的数据片段只有删除后的内容。

2020.12.17 11:23:58.391321 [ 171 ] {73aa9164-54e2-4823-bf06-5550e01ae6f7} <Debug> executeQuery: (from [::1]:40442) ALTER TABLE datasets.test DELETE WHERE 1 = 1

2020.12.17 11:23:58.397139 [ 171 ] {73aa9164-54e2-4823-bf06-5550e01ae6f7} <Information> datasets.test: Added mutation: mutation_3.txt

2020.12.17 11:23:58.397395 [ 171 ] {73aa9164-54e2-4823-bf06-5550e01ae6f7} <Debug> MemoryTracker: Peak memory usage (for query): 0.00 B.

2020.12.17 11:23:58.397612 [ 171 ] {} <Debug> MemoryTracker: Peak memory usage (total): 0.00 B.

2020.12.17 11:23:58.397695 [ 171 ] {} <Information> TCPHandler: Processed in 0.007 sec.

2020.12.17 11:23:58.399182 [ 16 ] {} <Debug> DiskLocal: Reserving 1.00 MiB on disk `default`, having unreserved 167.88 GiB.

2020.12.17 11:23:58.400007 [ 16 ] {} <Debug> datasets.test (SelectExecutor): Key condition: unknown

2020.12.17 11:23:58.400084 [ 16 ] {} <Debug> datasets.test (SelectExecutor): MinMax index condition: unknown

2020.12.17 11:23:58.400170 [ 16 ] {} <Debug> datasets.test (SelectExecutor): Selected 1 parts by date, 1 parts by key, 1 marks to read from 1 ranges

2020.12.17 11:23:58.400256 [ 16 ] {} <Trace> MergeTreeSelectProcessor: Reading 1 ranges from part 20201217_1_1_0_2, approx. 8192 rows starting from 0

2020.12.17 11:23:58.400353 [ 16 ] {} <Trace> InterpreterSelectQuery: FetchColumns -> Complete

2020.12.17 11:23:58.400484 [ 16 ] {} <Trace> Aggregator: Aggregating

2020.12.17 11:23:58.400788 [ 16 ] {} <Trace> Aggregator: Aggregation method: without_key

2020.12.17 11:23:58.400900 [ 16 ] {} <Trace> Aggregator: Aggregated. 1 to 1 rows (from 0.000 MiB) in 0.000 sec. (3096.301 rows/sec., 0.006 MiB/sec.)

2020.12.17 11:23:58.400968 [ 16 ] {} <Trace> Aggregator: Merging aggregated data

2020.12.17 11:23:58.401045 [ 16 ] {} <Trace> virtual DB::MergingAndConvertingBlockInputStream::~MergingAndConvertingBlockInputStream(): Waiting for threads to finish

2020.12.17 11:23:58.401167 [ 16 ] {} <Trace> datasets.test (MergerMutator): Mutating part 20201217_1_1_0_2 to mutation version 3

2020.12.17 11:23:58.402338 [ 16 ] {} <Debug> datasets.test (SelectExecutor): Key condition: false

2020.12.17 11:23:58.402413 [ 16 ] {} <Debug> datasets.test (SelectExecutor): MinMax index condition: false

2020.12.17 11:23:58.402457 [ 16 ] {} <Debug> datasets.test (SelectExecutor): Selected 0 parts by date, 0 parts by key, 0 marks to read from 0 ranges

2020.12.17 11:23:58.402539 [ 16 ] {} <Trace> InterpreterSelectQuery: FetchColumns -> Complete

2020.12.17 11:23:58.404113 [ 16 ] {} <Trace> datasets.test: Renaming temporary part tmp_mut_20201217_1_1_0_3 to 20201217_1_1_0_3.

4 执行阶段查看

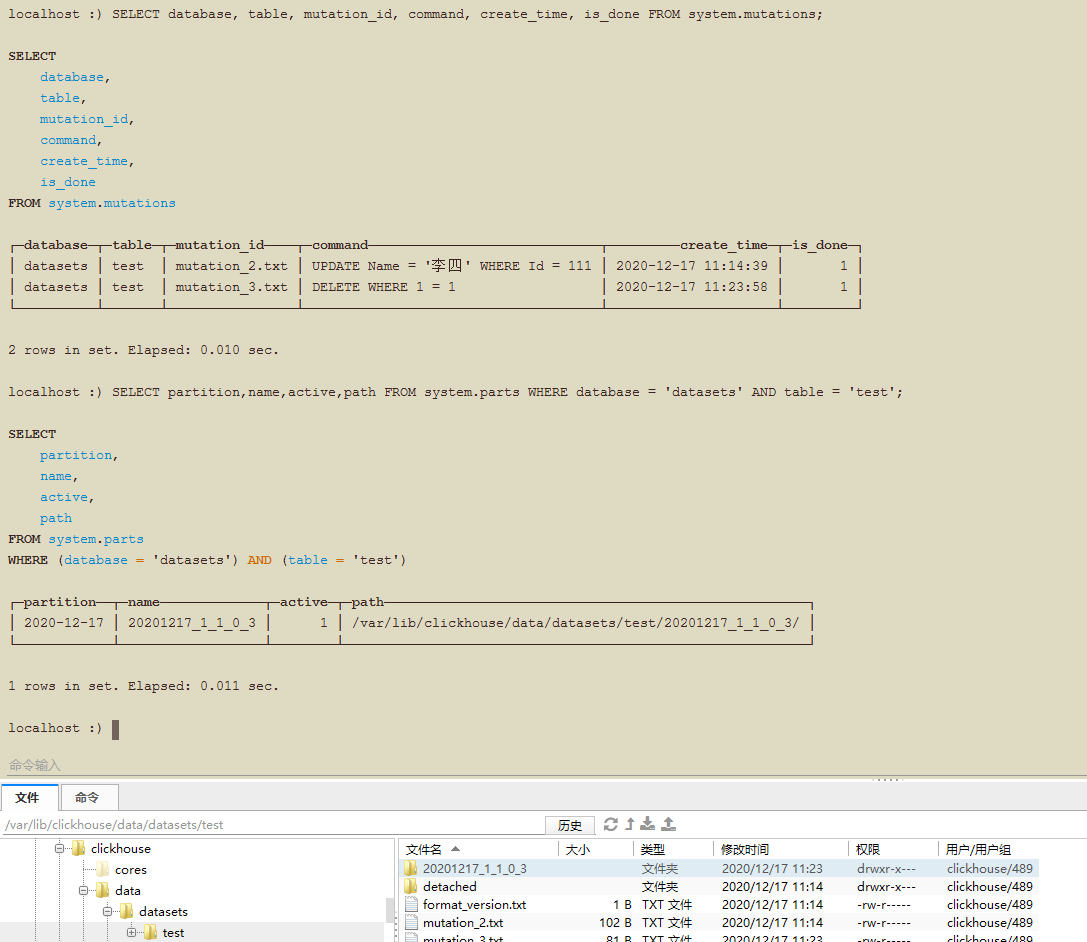

由于是受理请求,执行mutation操作,移除旧的数据片段这些都是异步操作,因此可以通过系统表的中数据来判断到达哪一个阶段。当mutation操作执行完成后,system.mutations表中对应的mutation记录中is_done字段的值会变为1。当旧的数据片段移除后,system.parts表中旧数据片段对应的记录会被移除。

「如果这篇文章对你有用,请支持一下哦」

如果这篇文章对你有用,请支持一下哦

使用微信扫描二维码完成支付