TOC

一 环境

1.1 集群节点

集群shard_replica_cluster_1包括4个Clickhouse节点,1个ZooKeeper节点。集群中只有1个数据库datasets,数据库中只有1张表hit_v1,数据800多万,来源于官方测试数据集。集群机器如下。

| 节点 | IP | CPU | Memory | Disk |

|---|---|---|---|---|

| Clickhouse node1 | 172.31.5.21 | 32 | 32 | 500G |

| Clickhouse node2 | 172.31.5.22 | 32 | 32 | 500G |

| Clickhouse node3 | 172.31.5.23 | 32 | 32 | 500G |

| Clickhouse node4 | 172.31.5.24 | 32 | 32 | 500G |

| ZooKeeper | 172.31.5.30 | 16 | 16 | 100G |

集群配置如下:

<clickhouse_remote_servers>

<shard_replica_cluster_1>

<shard>

<weight>1</weight>

<internal_replication>true</internal_replication>

<replica>

<host>172.31.5.21</host>

<port>9000</port>

</replica>

<replica>

<host>172.31.5.22</host>

<port>9000</port>

</replica>

</shard>

<shard>

<weight>1</weight>

<internal_replication>true</internal_replication>

<replica>

<host>172.31.5.23</host>

<port>9000</port>

</replica>

<replica>

<host>172.31.5.24</host>

<port>9000</port>

</replica>

</shard>

</shard_replica_cluster_1>

</clickhouse_remote_servers>

Clickhouse是2分片1副本,5.21是分片1,5.22是分片1的副本;5.23是分片2,5.24是分片2的副本。

二 缩容

2.1 下线副本

副本节点下线,主副本仍然保留相同的数据,因此不需要对副本节点数据进行备份。同时由于主副本和副本间是对等的,因此下线主副本和副本原理相同,下线主副本后,副本分片会选举成为主副本提供服务。

以下线shard_replica_cluster_1集群分片1副本 172.31.5.22 节点为例。首先备份5.21,5.23-24节点的metrika.xml配置文件,然后依次修改配置文件。

[root@localhost /]# cp /etc/clickhouse-server/metrika.xml /etc/clickhouse-server/metrika.xml.bak

[root@localhost /]# vim /etc/clickhouse-server/metrika.xml

从集群配置中移除分片1的副本配置,修改成如下配置。

<clickhouse_remote_servers>

<shard_replica_cluster_1>

<shard>

<weight>1</weight>

<internal_replication>true</internal_replication>

<replica>

<host>172.31.5.21</host>

<port>9000</port>

</replica>

</shard>

<shard>

<weight>1</weight>

<internal_replication>true</internal_replication>

<replica>

<host>172.31.5.23</host>

<port>9000</port>

</replica>

<replica>

<host>172.31.5.24</host>

<port>9000</port>

</replica>

</shard>

</shard_replica_cluster_1>

</clickhouse_remote_servers>

修改完成后重启5.21,5.23-24节点,关闭5.22节点

# 重启节点

service clickhouse-server restart

# 关闭节点

service clickhouse-server stop

登录客户端查看集群,可以发现集群已经变成3个节点。

SELECT * FROM system.clusters;

2.2 下线分片

下线分片是指整个分片的全部数据下线,假设分片1有3个节点,那么下线分片1需要将主副本以及2个副本全部下线。分片下线后将不能提供服务,因此分片的数据需要迁移至其他节点。数据迁移可以参考数据备份章节的方法,下面以FETCH备份为例。

以下线shard_replica_cluster_1集群分片2为例,分片2有1个副本,需要下线5.23-24节点。

首先迁移分片2的数据至其他节点,将5.23的数据迁移到5.21。

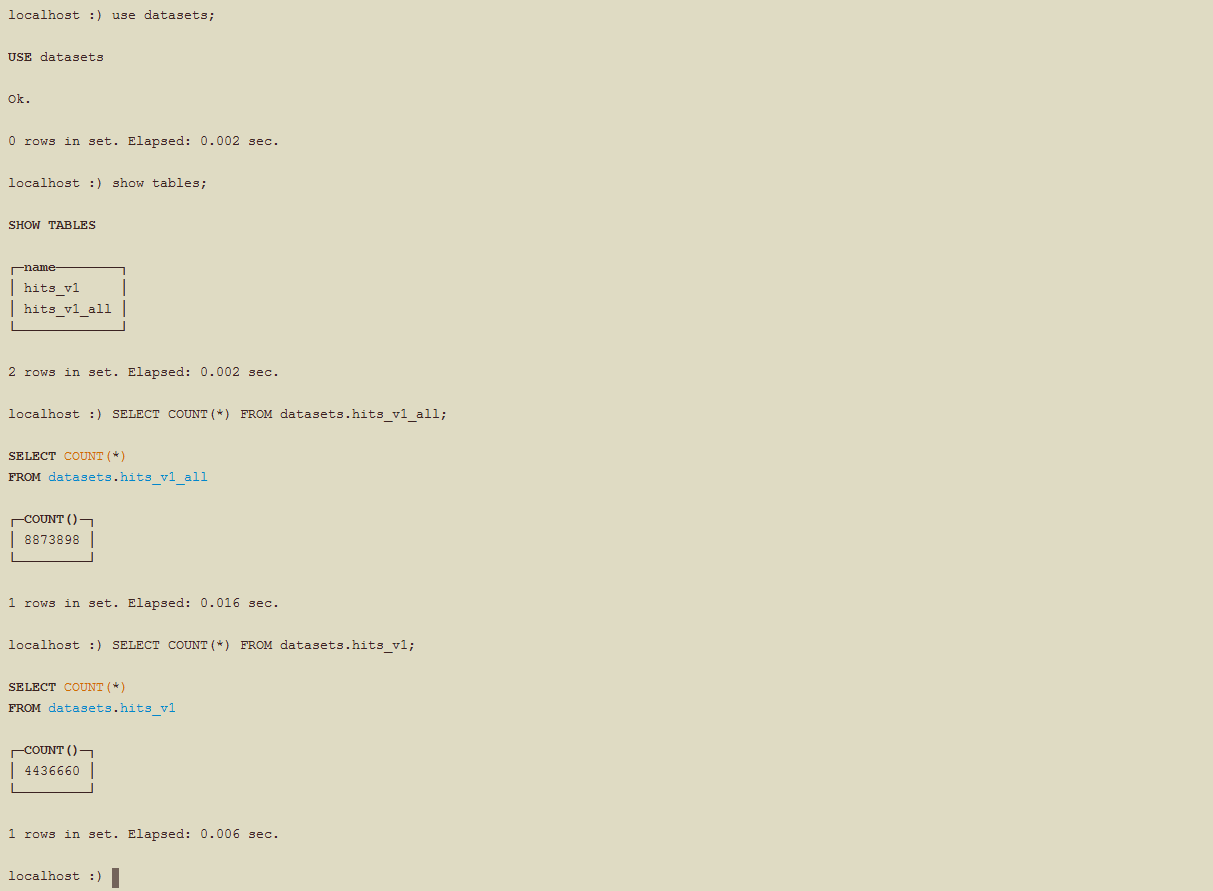

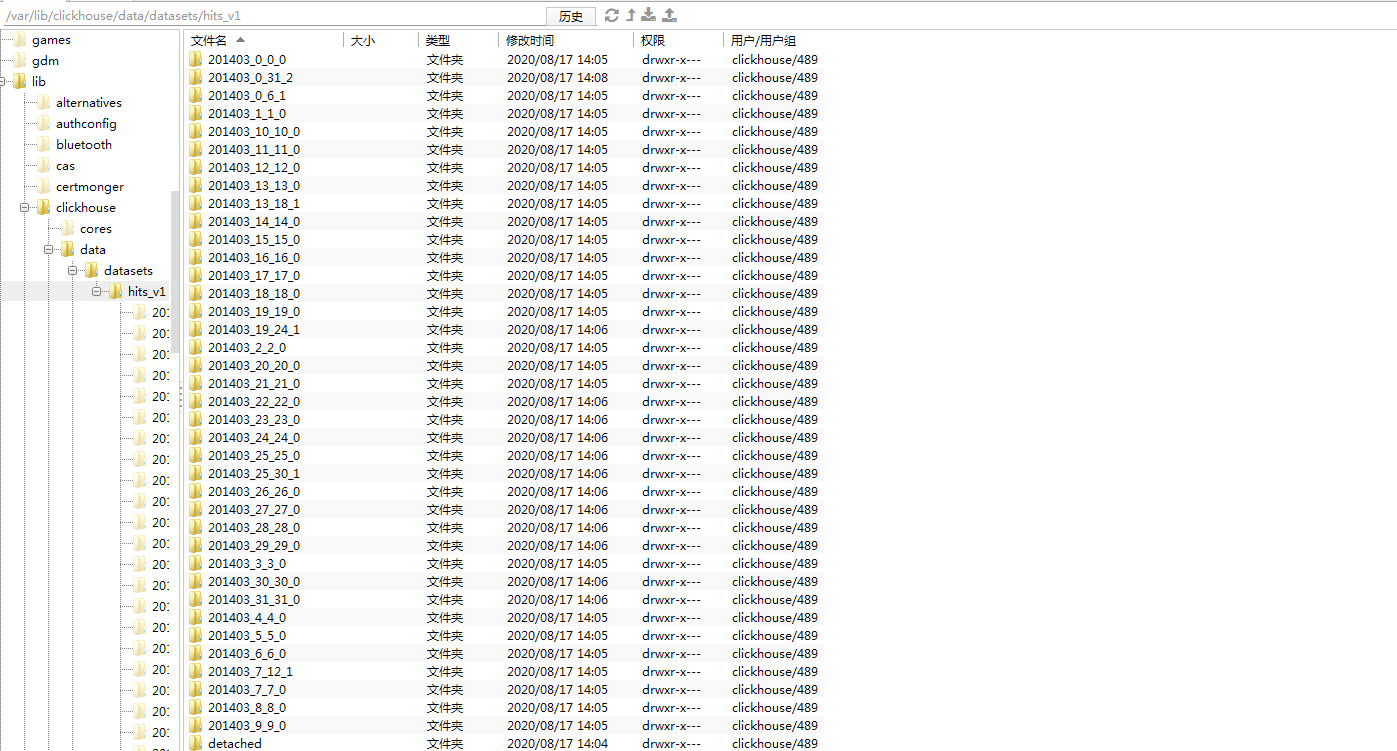

可以看到5.23节点中有hits_v1表数据,共8873898条记录,该节点数据4436660条。分片中只有1个分区201403。

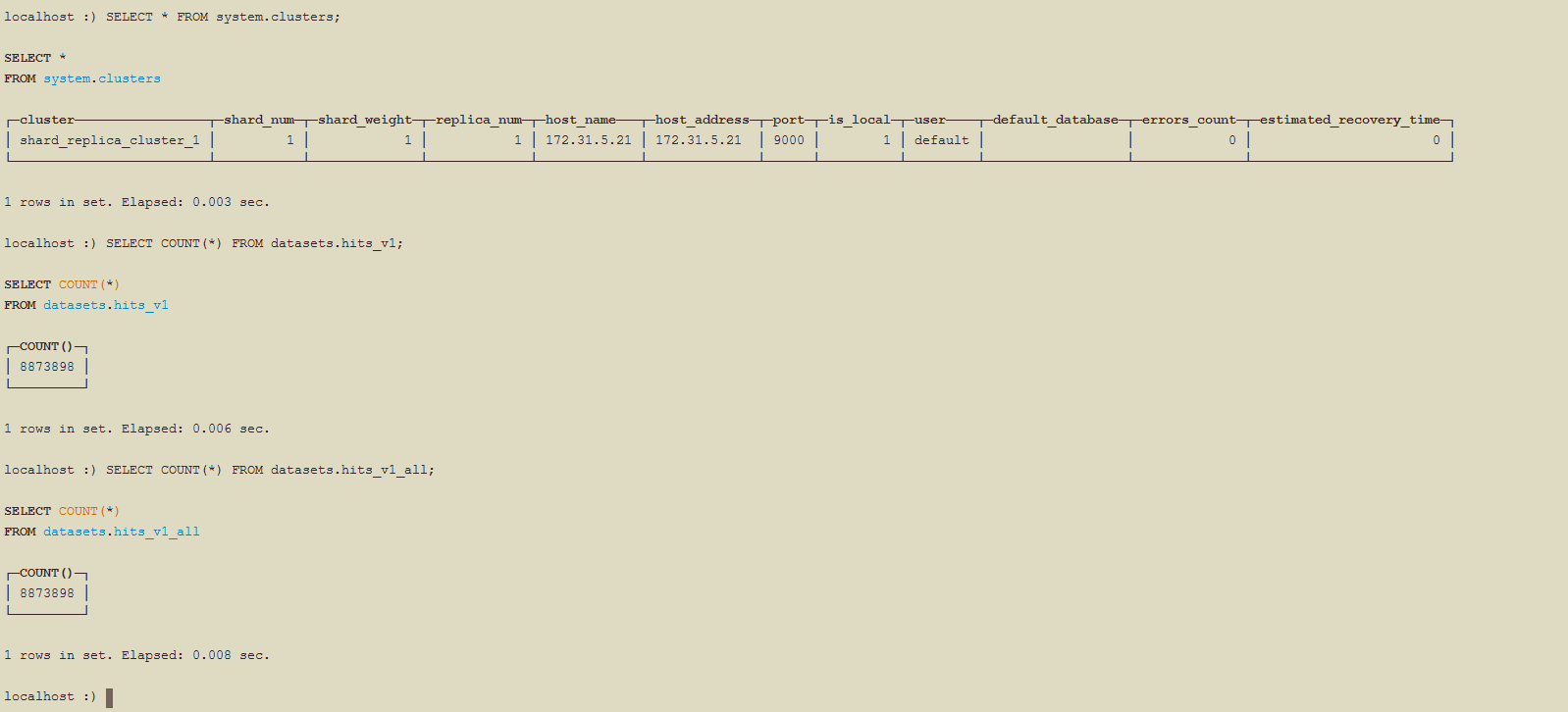

在5.21节点执行下面语句进行备份。

clickhouse-client -m --query "ALTER TABLE datasets.hits_v1 FETCH PARTITION 201403 FROM '/clickhouse/tables/datasets/01-02/hits_v1'"

执行结束后可以看到/hits_v1/detached/目录下已经有分片2的数据。

clickhouse-client -m --query "ALTER TABLE datasets.hits_v1 ATTACH PARTITION 201403"

clickhouse-client -m --query "SELECT COUNT(*) FROM datasets.hits_v1"

可以看到数据已经迁移成功。

接着修改配置文件,下线5.23-24节点。备份5.21节点的metrika.xml配置文件,然后依次修改配置文件。

[root@localhost /]# cp /etc/clickhouse-server/metrika.xml /etc/clickhouse-server/metrika.xml.bak

[root@localhost /]# vim /etc/clickhouse-server/metrika.xml

从集群配置中移除分片2的配置,修改成如下配置。

<clickhouse_remote_servers>

<shard_replica_cluster_1>

<shard>

<weight>1</weight>

<internal_replication>true</internal_replication>

<replica>

<host>172.31.5.21</host>

<port>9000</port>

</replica>

</shard>

</shard_replica_cluster_1>

</clickhouse_remote_servers>

修改完成后重启5.21,关闭5.23-24节点

# 重启节点

service clickhouse-server restart

# 关闭节点

service clickhouse-server stop

登录客户端查看集群,可以发现集群只有1个节点,同时数据量正确。

三 扩容

3.1 增加副本

增加副本节点,仅需要修改新节点配置,然后执行建表语句,语句执行后会从ZooKeeper中回去主节点信息,然后同步数据。但是这样主节点没有副本节点信息,执行查询语句时只会选择主节点,而副本节点查询语句可以在主节点和副本节点间随机选择,建议主节点同样修改配置,使得主节点副本节点配置保持一致。

以shard_replica_cluster_1集群分片1增加副本,分片1没有副本,新增5.22节点。

修改节点的metrika.xml配置文件。

[root@localhost /]# vim /etc/clickhouse-server/metrika.xml

从集群配置中移除分片2的配置,修改成如下配置。

<clickhouse_remote_servers>

<shard_replica_cluster_1>

<shard>

<weight>1</weight>

<internal_replication>true</internal_replication>

<replica>

<host>172.31.5.21</host>

<port>9000</port>

</replica>

<replica>

<host>172.31.5.22</host>

<port>9000</port>

</replica>

</shard>

</shard_replica_cluster_1>

</clickhouse_remote_servers>

修改完成后启动5.22节点

# 启动节点

service clickhouse-server start

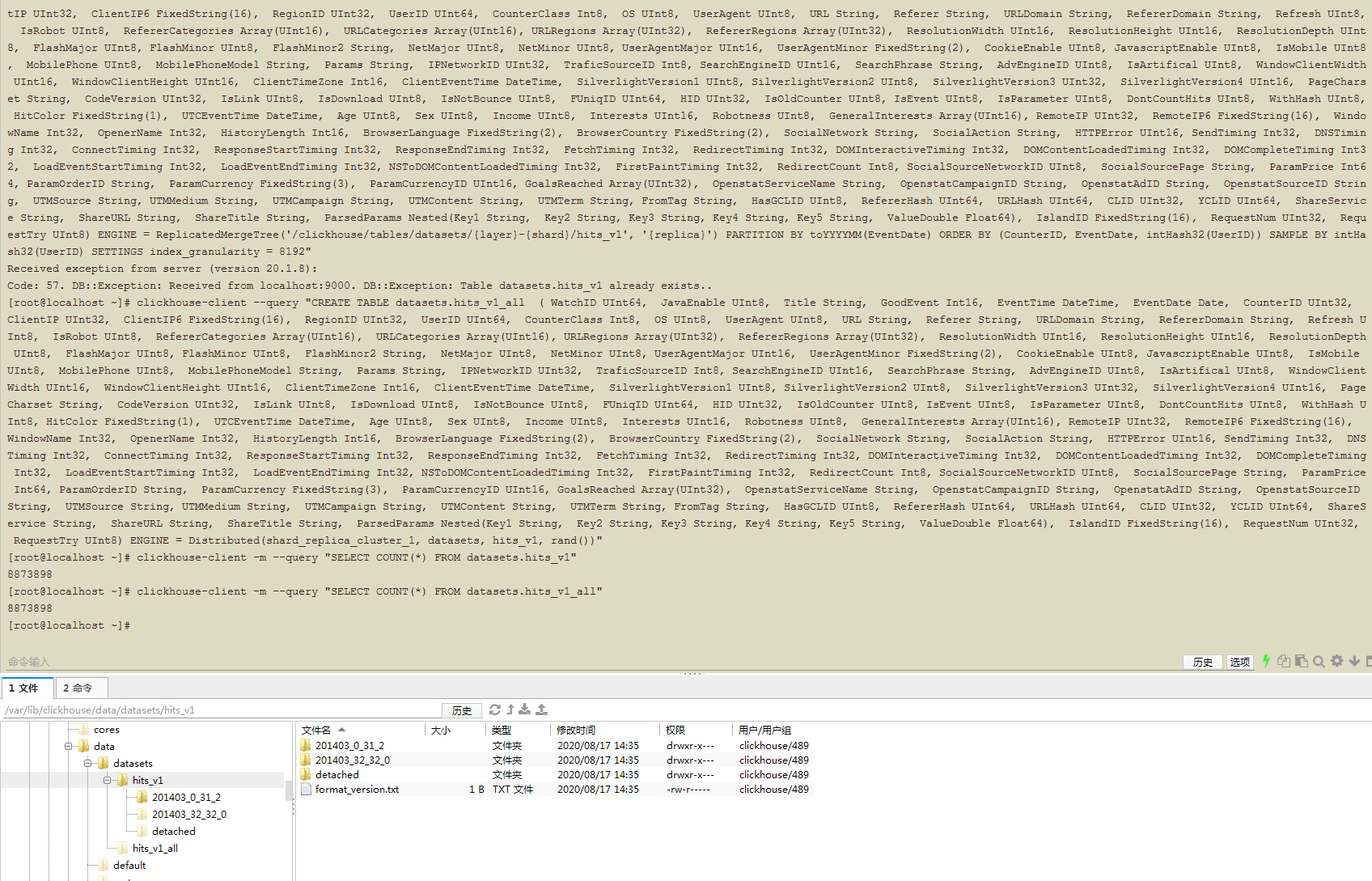

在5.22节点执行建表语句,并查询结果

clickhouse-client --query "CREATE TABLE datasets.hits_v1 ( WatchID UInt64, JavaEnable UInt8, Title String, GoodEvent Int16, EventTime DateTime, EventDate Date, CounterID UInt32, ClientIP UInt32, ClientIP6 FixedString(16), RegionID UInt32, UserID UInt64, CounterClass Int8, OS UInt8, UserAgent UInt8, URL String, Referer String, URLDomain String, RefererDomain String, Refresh UInt8, IsRobot UInt8, RefererCategories Array(UInt16), URLCategories Array(UInt16), URLRegions Array(UInt32), RefererRegions Array(UInt32), ResolutionWidth UInt16, ResolutionHeight UInt16, ResolutionDepth UInt8, FlashMajor UInt8, FlashMinor UInt8, FlashMinor2 String, NetMajor UInt8, NetMinor UInt8, UserAgentMajor UInt16, UserAgentMinor FixedString(2), CookieEnable UInt8, JavascriptEnable UInt8, IsMobile UInt8, MobilePhone UInt8, MobilePhoneModel String, Params String, IPNetworkID UInt32, TraficSourceID Int8, SearchEngineID UInt16, SearchPhrase String, AdvEngineID UInt8, IsArtifical UInt8, WindowClientWidth UInt16, WindowClientHeight UInt16, ClientTimeZone Int16, ClientEventTime DateTime, SilverlightVersion1 UInt8, SilverlightVersion2 UInt8, SilverlightVersion3 UInt32, SilverlightVersion4 UInt16, PageCharset String, CodeVersion UInt32, IsLink UInt8, IsDownload UInt8, IsNotBounce UInt8, FUniqID UInt64, HID UInt32, IsOldCounter UInt8, IsEvent UInt8, IsParameter UInt8, DontCountHits UInt8, WithHash UInt8, HitColor FixedString(1), UTCEventTime DateTime, Age UInt8, Sex UInt8, Income UInt8, Interests UInt16, Robotness UInt8, GeneralInterests Array(UInt16), RemoteIP UInt32, RemoteIP6 FixedString(16), WindowName Int32, OpenerName Int32, HistoryLength Int16, BrowserLanguage FixedString(2), BrowserCountry FixedString(2), SocialNetwork String, SocialAction String, HTTPError UInt16, SendTiming Int32, DNSTiming Int32, ConnectTiming Int32, ResponseStartTiming Int32, ResponseEndTiming Int32, FetchTiming Int32, RedirectTiming Int32, DOMInteractiveTiming Int32, DOMContentLoadedTiming Int32, DOMCompleteTiming Int32, LoadEventStartTiming Int32, LoadEventEndTiming Int32, NSToDOMContentLoadedTiming Int32, FirstPaintTiming Int32, RedirectCount Int8, SocialSourceNetworkID UInt8, SocialSourcePage String, ParamPrice Int64, ParamOrderID String, ParamCurrency FixedString(3), ParamCurrencyID UInt16, GoalsReached Array(UInt32), OpenstatServiceName String, OpenstatCampaignID String, OpenstatAdID String, OpenstatSourceID String, UTMSource String, UTMMedium String, UTMCampaign String, UTMContent String, UTMTerm String, FromTag String, HasGCLID UInt8, RefererHash UInt64, URLHash UInt64, CLID UInt32, YCLID UInt64, ShareService String, ShareURL String, ShareTitle String, ParsedParams Nested(Key1 String, Key2 String, Key3 String, Key4 String, Key5 String, ValueDouble Float64), IslandID FixedString(16), RequestNum UInt32, RequestTry UInt8) ENGINE = ReplicatedMergeTree('/clickhouse/tables/datasets/{layer}-{shard}/hits_v1', '{replica}') PARTITION BY toYYYYMM(EventDate) ORDER BY (CounterID, EventDate, intHash32(UserID)) SAMPLE BY intHash32(UserID) SETTINGS index_granularity = 8192"

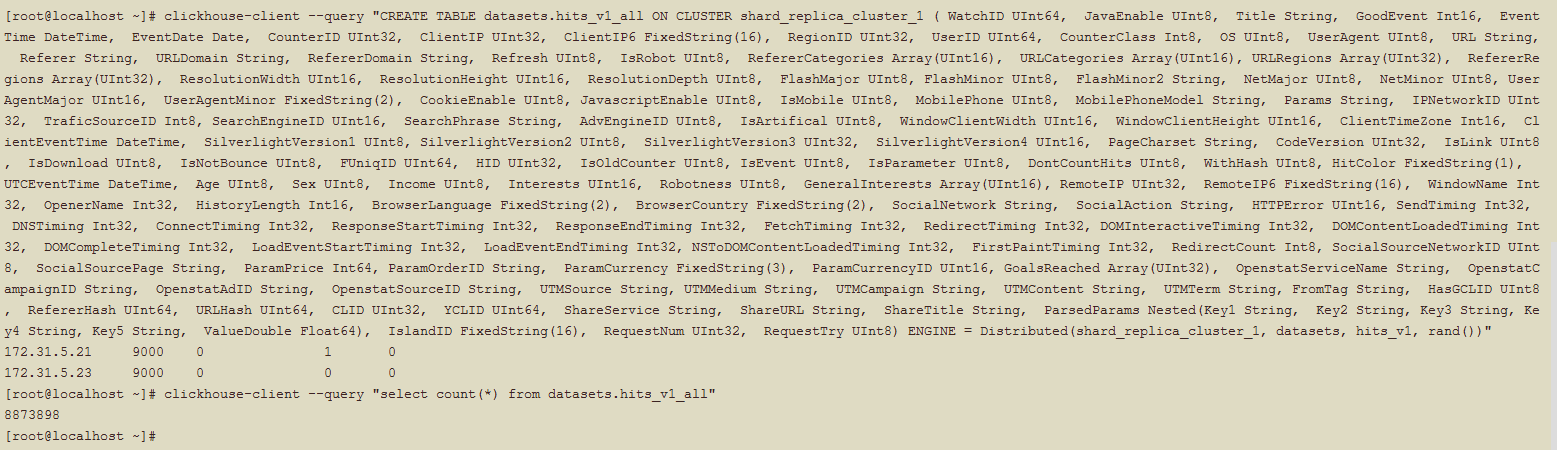

clickhouse-client --query "CREATE TABLE datasets.hits_v1_all ( WatchID UInt64, JavaEnable UInt8, Title String, GoodEvent Int16, EventTime DateTime, EventDate Date, CounterID UInt32, ClientIP UInt32, ClientIP6 FixedString(16), RegionID UInt32, UserID UInt64, CounterClass Int8, OS UInt8, UserAgent UInt8, URL String, Referer String, URLDomain String, RefererDomain String, Refresh UInt8, IsRobot UInt8, RefererCategories Array(UInt16), URLCategories Array(UInt16), URLRegions Array(UInt32), RefererRegions Array(UInt32), ResolutionWidth UInt16, ResolutionHeight UInt16, ResolutionDepth UInt8, FlashMajor UInt8, FlashMinor UInt8, FlashMinor2 String, NetMajor UInt8, NetMinor UInt8, UserAgentMajor UInt16, UserAgentMinor FixedString(2), CookieEnable UInt8, JavascriptEnable UInt8, IsMobile UInt8, MobilePhone UInt8, MobilePhoneModel String, Params String, IPNetworkID UInt32, TraficSourceID Int8, SearchEngineID UInt16, SearchPhrase String, AdvEngineID UInt8, IsArtifical UInt8, WindowClientWidth UInt16, WindowClientHeight UInt16, ClientTimeZone Int16, ClientEventTime DateTime, SilverlightVersion1 UInt8, SilverlightVersion2 UInt8, SilverlightVersion3 UInt32, SilverlightVersion4 UInt16, PageCharset String, CodeVersion UInt32, IsLink UInt8, IsDownload UInt8, IsNotBounce UInt8, FUniqID UInt64, HID UInt32, IsOldCounter UInt8, IsEvent UInt8, IsParameter UInt8, DontCountHits UInt8, WithHash UInt8, HitColor FixedString(1), UTCEventTime DateTime, Age UInt8, Sex UInt8, Income UInt8, Interests UInt16, Robotness UInt8, GeneralInterests Array(UInt16), RemoteIP UInt32, RemoteIP6 FixedString(16), WindowName Int32, OpenerName Int32, HistoryLength Int16, BrowserLanguage FixedString(2), BrowserCountry FixedString(2), SocialNetwork String, SocialAction String, HTTPError UInt16, SendTiming Int32, DNSTiming Int32, ConnectTiming Int32, ResponseStartTiming Int32, ResponseEndTiming Int32, FetchTiming Int32, RedirectTiming Int32, DOMInteractiveTiming Int32, DOMContentLoadedTiming Int32, DOMCompleteTiming Int32, LoadEventStartTiming Int32, LoadEventEndTiming Int32, NSToDOMContentLoadedTiming Int32, FirstPaintTiming Int32, RedirectCount Int8, SocialSourceNetworkID UInt8, SocialSourcePage String, ParamPrice Int64, ParamOrderID String, ParamCurrency FixedString(3), ParamCurrencyID UInt16, GoalsReached Array(UInt32), OpenstatServiceName String, OpenstatCampaignID String, OpenstatAdID String, OpenstatSourceID String, UTMSource String, UTMMedium String, UTMCampaign String, UTMContent String, UTMTerm String, FromTag String, HasGCLID UInt8, RefererHash UInt64, URLHash UInt64, CLID UInt32, YCLID UInt64, ShareService String, ShareURL String, ShareTitle String, ParsedParams Nested(Key1 String, Key2 String, Key3 String, Key4 String, Key5 String, ValueDouble Float64), IslandID FixedString(16), RequestNum UInt32, RequestTry UInt8) ENGINE = Distributed(shard_replica_cluster_1, datasets, hits_v1, rand())"

clickhouse-client -m --query "SELECT COUNT(*) FROM datasets.hits_v1"

clickhouse-client -m --query "SELECT COUNT(*) FROM datasets.hits_v1_all"

可以看到5.22节点中节点数据量正确,与5.21相同。

3.2 增加分片

1.迁移数据

增加分片节点,集群的分片数将增加,旧表与新表的结构将不一致,因此增加新分片时,需要先增加一个新的集群,然后将旧集群中的数据迁移至新集群,然后删除旧集群的数据与集群配置信息。

以shard_replica_cluster_1集群增加分片2为例,集群有1分片1副本,增加分片2,需要增加5.23节点。

修改5.21-23节点的metrika.xml配置文件。

[root@localhost /]# vim /etc/clickhouse-server/metrika.xml

从集群配置中新增shard_replica_cluster_2集群配置,修改成如下配置。

<clickhouse_remote_servers>

<shard_replica_cluster_1>

<shard>

<weight>1</weight>

<internal_replication>true</internal_replication>

<replica>

<host>172.31.5.21</host>

<port>9000</port>

</replica>

<replica>

<host>172.31.5.22</host>

<port>9000</port>

</replica>

</shard>

</shard_replica_cluster_1>

<shard_replica_cluster_2>

<shard>

<weight>1</weight>

<internal_replication>true</internal_replication>

<replica>

<host>172.31.5.21</host>

<port>9000</port>

</replica>

<replica>

<host>172.31.5.22</host>

<port>9000</port>

</replica>

</shard>

<shard>

<weight>1</weight>

<internal_replication>true</internal_replication>

<replica>

<host>172.31.5.23</host>

<port>9000</port>

</replica>

</shard>

</shard_replica_cluster_2>

</clickhouse_remote_servers>

重启5.21-23节点。

创建新集群的表。表结构与原来相同,表名增加后缀_new,在shard_replica_cluster_2执行。

clickhouse-client --query "CREATE DATABASE IF NOT EXISTS datasets ON CLUSTER shard_replica_cluster_2"

clickhouse-client --query "CREATE TABLE datasets.hits_v1_new ON CLUSTER shard_replica_cluster_2 ( WatchID UInt64, JavaEnable UInt8, ...) ENGINE = ReplicatedMergeTree('/clickhouse/tables/datasets/{layer}-{shard}/hits_v1_new', '{replica}') PARTITION BY toYYYYMM(EventDate) ORDER BY (CounterID, EventDate, intHash32(UserID)) SAMPLE BY intHash32(UserID) SETTINGS index_granularity = 8192"

clickhouse-client --query "CREATE TABLE datasets.hits_v1_new_all ON CLUSTER shard_replica_cluster_2 ( WatchID UInt64, JavaEnable UInt8, ...) ENGINE = Distributed(shard_replica_cluster_2, datasets, hits_v1_new, rand())"

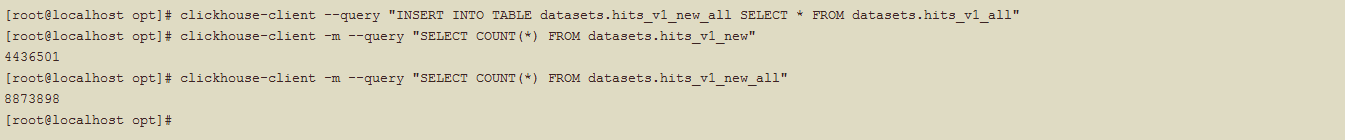

迁移数据,从hits_v1_all到hits_v1_new_all

clickhouse-client --query "INSERT INTO TABLE datasets.hits_v1_new_all SELECT * FROM datasets.hits_v1_all"

clickhouse-client -m --query "SELECT COUNT(*) FROM datasets.hits_v1_new"

clickhouse-client -m --query "SELECT COUNT(*) FROM datasets.hits_v1_new_all"

迁移完成后删除旧数据,并rename新表

迁移完成后删除旧数据,并rename新表

clickhouse-client -m --query "DROP TABLE datasets.hits_v1_all ON CLUSTER shard_replica_cluster_1"

clickhouse-client -m --query "DROP TABLE datasets.hits_v1 ON CLUSTER shard_replica_cluster_1"

clickhouse-client -m --query "RENAME TABLE datasets.hits_v1_new TO datasets.hits_v1, datasets.hits_v1_new_all TO datasets.hits_v1_all"

修改5.21-23节点的metrika.xml配置文件,移除shard_replica_cluster_1集群配置。

[root@localhost /]# vim /etc/clickhouse-server/metrika.xml

2.不迁移数据

增加分片节点,也可以不迁移数据。增加新分片后,在分片上刷新建表语句,然后重建分布式表。这种方法新数据会均衡的分布,但是历史数据仅在旧分片上存在,新分片上没有,查询历史数据会导致旧分片所在节点压力较大。

以shard_replica_cluster_1集群增加分片2为例,集群有1分片0副本,增加分片2,需要增加5.23节点。

修改5.21,23节点的metrika.xml配置文件。

[root@localhost /]# vim /etc/clickhouse-server/metrika.xml

从集群配置中新增shard_replica_cluster_2集群配置,修改成如下配置。

<clickhouse_remote_servers>

<shard_replica_cluster_1>

<shard>

<weight>1</weight>

<internal_replication>true</internal_replication>

<replica>

<host>172.31.5.21</host>

<port>9000</port>

</replica>

</shard>

<shard>

<weight>1</weight>

<internal_replication>true</internal_replication>

<replica>

<host>172.31.5.23</host>

<port>9000</port>

</replica>

</shard>

</shard_replica_cluster_1>

</clickhouse_remote_servers>

重启5.21、23节点。

在分片2上刷新建表语句,然后重建分布式表。

# 5.21

clickhouse-client --query "DROP TABLE datasets.hits_v1_all"

# 5.23

clickhouse-client --query "CREATE TABLE datasets.hits_v1 ( WatchID UInt64, JavaEnable UInt8, ...) ENGINE = ReplicatedMergeTree('/clickhouse/tables/datasets/{layer}-{shard}/hits_v1', '{replica}') PARTITION BY toYYYYMM(EventDate) ORDER BY (CounterID, EventDate, intHash32(UserID)) SAMPLE BY intHash32(UserID) SETTINGS index_granularity = 8192"

clickhouse-client --query "CREATE TABLE datasets.hits_v1_all ON CLUSTER shard_replica_cluster_1 ( WatchID UInt64, JavaEnable UInt8, ...) ENGINE = Distributed(shard_replica_cluster_1, datasets, hits_v1, rand())"

「如果这篇文章对你有用,请支持一下哦」

如果这篇文章对你有用,请支持一下哦

使用微信扫描二维码完成支付