TOC

一 背景介绍

1.1 背景

基线测试环境同事发现一个问题,有一个复制表的mutaion操作状态一直显示未完成。

1.2 复制表mutation操作执行过程

mutation操作时异步的,在提交mutation命令后,ClickHouse服务端会直接返回结果。然后提交至ZooKeeper /clickhouse/tables/database/layer-shard/tablename/mutations路径下,所有ClickHouse节点会监听该目录,有新命令时读取并执行。

1.3 排查思路

经查看该表是复制表,二分片一副本。

查看ClickHouse服务端错误日志clickhouse-server.err.log没有发现异常,查看服务器日志clickhouse-server.log同样没有问题,整个集群状态也是正常的。

整个表除了不能执行mutation操作外,写入和查询都是正常的。mutation操作为ALTER TABLE tablename DELETE WHERE 1=1;。

排查到这里毫无思路,准备先解决问题,让环境恢复正常。

思考了一下解决思路,直接删掉分区。

先查看哪些分区在作妖,发现只有2个分区,20200712和20200713。

SELECT partition FROM system.parts WHERE database = 'RISK' AND table = 'FUNDIN' GROUP BY partition;

drop分区

ALTER TABLE RISK.FUNDIN DROP PARTITION '201403';

drop分区完成后,mutation随之完成。本以为这个问题就这样隐藏掉了,但是看日志柳暗花明,发现了这样一行日志。

2020.11.02 10:43:53.881102 [ 51 ] {} <Debug> RISK.FUNDIN: There is no part 20200712_0_262_4_355 in ZooKeeper, it was only in filesystem

ZK中没有这个分区,只有本地系统有,瞬间明白这是由于ClickHouse服务端分区元数据和ZooKeeper端不一致导致,但是正常情况两边肯定是一致的,大概率是人为因素。

咨询同事发现,大概7月份给测试环境ClickHouse集群上安装Zabbix监控,由于出了一些意外,使用服务器快照恢复了ClickHouse集群,但是ZooKeeper在其他机器上,未恢复ZooKeeper所在服务器快照,问题到此明了,人为骚操作导致。

二 验证

在分析原因后,理论上能够说的通,但是实际是否真是这样,还需要进行验证,因此我以官方的datasets.hit_v1数据集进行验证。

2.1 验证思路

首先准备一个一分片一副本的集群,创建数据库、表,写入数据,表按月分区,只有一个分区201403,数据8873898条,然后对ClickHouse所在虚拟机进行快照备份。

备份完成后删除分区,此时ZooKeeper中没有分区信息,然后根据虚拟机快照恢复。

恢复后分区信息出现不一致,然后在分片节点使用mutation操作,查看mutation是否能够执行成功。

2.2 准备验证环境

localhost :) CREATE DATABASE IF NOT EXISTS datasets ON CLUSTER replica_cluster_1;

CREATE DATABASE IF NOT EXISTS datasets ON CLUSTER replica_cluster_1

┌─host────────┬─port─┬─status─┬─error─┬─num_hosts_remaining─┬─num_hosts_active─┐

│ 172.31.5.22 │ 9000 │ 0 │ │ 1 │ 0 │

│ 172.31.5.21 │ 9000 │ 0 │ │ 0 │ 0 │

└─────────────┴──────┴────────┴───────┴─────────────────────┴──────────────────┘

2 rows in set. Elapsed: 0.128 sec.

localhost :) CREATE TABLE datasets.hits_v1 ON CLUSTER replica_cluster_1 (`WatchID` UInt64, `JavaEnable` UInt8, `Title` String, `GoodEvent` Int16, `EventTime` DateTime, `EventDate` Date, `CounterID` UInt32, `ClientIP` UInt32, `ClientIP6` FixedString(16), `RegionID` UInt32, `UserID` UInt64, `CounterClass` Int8, `OS` UInt8, `UserAgent` UInt8, `URL` String, `Referer` String, `URLDomain` String, `RefererDomain` String, `Refresh` UInt8, `IsRobot` UInt8, `RefererCategories` Array(UInt16), `URLCategories` Array(UInt16), `URLRegions` Array(UInt32), `RefererRegions` Array(UInt32), `ResolutionWidth` UInt16, `ResolutionHeight` UInt16, `ResolutionDepth` UInt8, `FlashMajor` UInt8, `FlashMinor` UInt8, `FlashMinor2` String, `NetMajor` UInt8, `NetMinor` UInt8, `UserAgentMajor` UInt16, `UserAgentMinor` FixedString(2), `CookieEnable` UInt8, `JavascriptEnable` UInt8, `IsMobile` UInt8, `MobilePhone` UInt8, `MobilePhoneModel` String, `Params` String, `IPNetworkID` UInt32, `TraficSourceID` Int8, `SearchEngineID` UInt16, `SearchPhrase` String, `AdvEngineID` UInt8, `IsArtifical` UInt8, `WindowClientWidth` UInt16, `WindowClientHeight` UInt16, `ClientTimeZone` Int16, `ClientEventTime` DateTime, `SilverlightVersion1` UInt8, `SilverlightVersion2` UInt8, `SilverlightVersion3` UInt32, `SilverlightVersion4` UInt16, `PageCharset` String, `CodeVersion` UInt32, `IsLink` UInt8, `IsDownload` UInt8, `IsNotBounce` UInt8, `FUniqID` UInt64, `HID` UInt32, `IsOldCounter` UInt8, `IsEvent` UInt8, `IsParameter` UInt8, `DontCountHits` UInt8, `WithHash` UInt8, `HitColor` FixedString(1), `UTCEventTime` DateTime, `Age` UInt8, `Sex` UInt8, `Income` UInt8, `Interests` UInt16, `Robotness` UInt8, `GeneralInterests` Array(UInt16), `RemoteIP` UInt32, `RemoteIP6` FixedString(16), `WindowName` Int32, `OpenerName` Int32, `HistoryLength` Int16, `BrowserLanguage` FixedString(2), `BrowserCountry` FixedString(2), `SocialNetwork` String, `SocialAction` String, `HTTPError` UInt16, `SendTiming` Int32, `DNSTiming` Int32, `ConnectTiming` Int32, `ResponseStartTiming` Int32, `ResponseEndTiming` Int32, `FetchTiming` Int32, `RedirectTiming` Int32, `DOMInteractiveTiming` Int32, `DOMContentLoadedTiming` Int32, `DOMCompleteTiming` Int32, `LoadEventStartTiming` Int32, `LoadEventEndTiming` Int32, `NSToDOMContentLoadedTiming` Int32, `FirstPaintTiming` Int32, `RedirectCount` Int8, `SocialSourceNetworkID` UInt8, `SocialSourcePage` String, `ParamPrice` Int64, `ParamOrderID` String, `ParamCurrency` FixedString(3), `ParamCurrencyID` UInt16, `GoalsReached` Array(UInt32), `OpenstatServiceName` String, `OpenstatCampaignID` String, `OpenstatAdID` String, `OpenstatSourceID` String, `UTMSource` String, `UTMMedium` String, `UTMCampaign` String, `UTMContent` String, `UTMTerm` String, `FromTag` String, `HasGCLID` UInt8, `RefererHash` UInt64, `URLHash` UInt64, `CLID` UInt32, `YCLID` UInt64, `ShareService` String, `ShareURL` String, `ShareTitle` String, `ParsedParams.Key1` Array(String), `ParsedParams.Key2` Array(String), `ParsedParams.Key3` Array(String), `ParsedParams.Key4` Array(String), `ParsedParams.Key5` Array(String), `ParsedParams.ValueDouble` Array(Float64), `IslandID` FixedString(16), `RequestNum` UInt32, `RequestTry` UInt8) ENGINE = ReplicatedMergeTree('/clickhouse/tables/datasets/{layer}-{shard}/hits_v1', '{replica}') PARTITION BY toYYYYMM(EventDate) ORDER BY (CounterID, EventDate, intHash32(UserID)) SAMPLE BY intHash32(UserID) SETTINGS index_granularity = 8192;

CREATE TABLE datasets.hits_v1 ON CLUSTER replica_cluster_1

(

`WatchID` UInt64,

`JavaEnable` UInt8,

`Title` String,

`GoodEvent` Int16,

`EventTime` DateTime,

`EventDate` Date,

`CounterID` UInt32,

`ClientIP` UInt32,

`ClientIP6` FixedString(16),

`RegionID` UInt32,

`UserID` UInt64,

`CounterClass` Int8,

`OS` UInt8,

`UserAgent` UInt8,

`URL` String,

`Referer` String,

`URLDomain` String,

`RefererDomain` String,

`Refresh` UInt8,

`IsRobot` UInt8,

`RefererCategories` Array(UInt16),

`URLCategories` Array(UInt16),

`URLRegions` Array(UInt32),

`RefererRegions` Array(UInt32),

`ResolutionWidth` UInt16,

`ResolutionHeight` UInt16,

`ResolutionDepth` UInt8,

`FlashMajor` UInt8,

`FlashMinor` UInt8,

`FlashMinor2` String,

`NetMajor` UInt8,

`NetMinor` UInt8,

`UserAgentMajor` UInt16,

`UserAgentMinor` FixedString(2),

`CookieEnable` UInt8,

`JavascriptEnable` UInt8,

`IsMobile` UInt8,

`MobilePhone` UInt8,

`MobilePhoneModel` String,

`Params` String,

`IPNetworkID` UInt32,

`TraficSourceID` Int8,

`SearchEngineID` UInt16,

`SearchPhrase` String,

`AdvEngineID` UInt8,

`IsArtifical` UInt8,

`WindowClientWidth` UInt16,

`WindowClientHeight` UInt16,

`ClientTimeZone` Int16,

`ClientEventTime` DateTime,

`SilverlightVersion1` UInt8,

`SilverlightVersion2` UInt8,

`SilverlightVersion3` UInt32,

`SilverlightVersion4` UInt16,

`PageCharset` String,

`CodeVersion` UInt32,

`IsLink` UInt8,

`IsDownload` UInt8,

`IsNotBounce` UInt8,

`FUniqID` UInt64,

`HID` UInt32,

`IsOldCounter` UInt8,

`IsEvent` UInt8,

`IsParameter` UInt8,

`DontCountHits` UInt8,

`WithHash` UInt8,

`HitColor` FixedString(1),

`UTCEventTime` DateTime,

`Age` UInt8,

`Sex` UInt8,

`Income` UInt8,

`Interests` UInt16,

`Robotness` UInt8,

`GeneralInterests` Array(UInt16),

`RemoteIP` UInt32,

`RemoteIP6` FixedString(16),

`WindowName` Int32,

`OpenerName` Int32,

`HistoryLength` Int16,

`BrowserLanguage` FixedString(2),

`BrowserCountry` FixedString(2),

`SocialNetwork` String,

`SocialAction` String,

`HTTPError` UInt16,

`SendTiming` Int32,

`DNSTiming` Int32,

`ConnectTiming` Int32,

`ResponseStartTiming` Int32,

`ResponseEndTiming` Int32,

`FetchTiming` Int32,

`RedirectTiming` Int32,

`DOMInteractiveTiming` Int32,

`DOMContentLoadedTiming` Int32,

`DOMCompleteTiming` Int32,

`LoadEventStartTiming` Int32,

`LoadEventEndTiming` Int32,

`NSToDOMContentLoadedTiming` Int32,

`FirstPaintTiming` Int32,

`RedirectCount` Int8,

`SocialSourceNetworkID` UInt8,

`SocialSourcePage` String,

`ParamPrice` Int64,

`ParamOrderID` String,

`ParamCurrency` FixedString(3),

`ParamCurrencyID` UInt16,

`GoalsReached` Array(UInt32),

`OpenstatServiceName` String,

`OpenstatCampaignID` String,

`OpenstatAdID` String,

`OpenstatSourceID` String,

`UTMSource` String,

`UTMMedium` String,

`UTMCampaign` String,

`UTMContent` String,

`UTMTerm` String,

`FromTag` String,

`HasGCLID` UInt8,

`RefererHash` UInt64,

`URLHash` UInt64,

`CLID` UInt32,

`YCLID` UInt64,

`ShareService` String,

`ShareURL` String,

`ShareTitle` String,

`ParsedParams.Key1` Array(String),

`ParsedParams.Key2` Array(String),

`ParsedParams.Key3` Array(String),

`ParsedParams.Key4` Array(String),

`ParsedParams.Key5` Array(String),

`ParsedParams.ValueDouble` Array(Float64),

`IslandID` FixedString(16),

`RequestNum` UInt32,

`RequestTry` UInt8

)

ENGINE = ReplicatedMergeTree('/clickhouse/tables/datasets/{layer}-{shard}/hits_v1', '{replica}')

PARTITION BY toYYYYMM(EventDate)

ORDER BY (CounterID, EventDate, intHash32(UserID))

SAMPLE BY intHash32(UserID)

SETTINGS index_granularity = 8192

┌─host────────┬─port─┬─status─┬─error─┬─num_hosts_remaining─┬─num_hosts_active─┐

│ 172.31.5.22 │ 9000 │ 0 │ │ 1 │ 0 │

│ 172.31.5.21 │ 9000 │ 0 │ │ 0 │ 0 │

└─────────────┴──────┴────────┴───────┴─────────────────────┴──────────────────┘

2 rows in set. Elapsed: 0.127 sec.

写入数据

cat hits_v1.tsv | clickhouse-client --query "INSERT INTO datasets.hits_v1 FORMAT TSV" --max_insert_block_size=100000

# optionally you can optimize table

clickhouse-client --query "OPTIMIZE TABLE datasets.hits_v1 FINAL"

localhost :) SELECT COUNT(*) FROM datasets.hits_v1;

SELECT COUNT(*)

FROM datasets.hits_v1

┌─COUNT()─┐

│ 8873898 │

└─────────┘

1 rows in set. Elapsed: 0.006 sec.

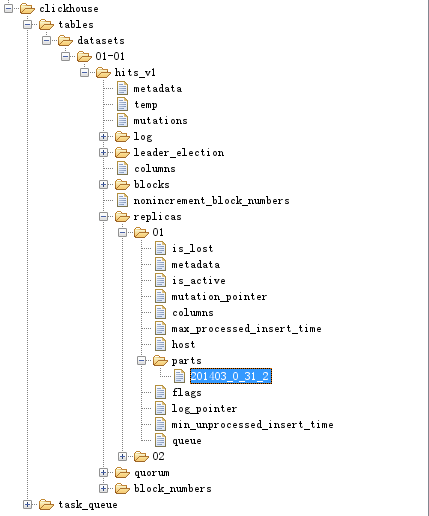

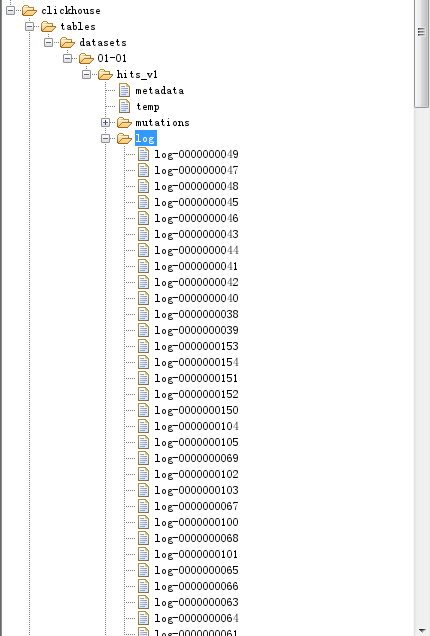

zk内分区信息

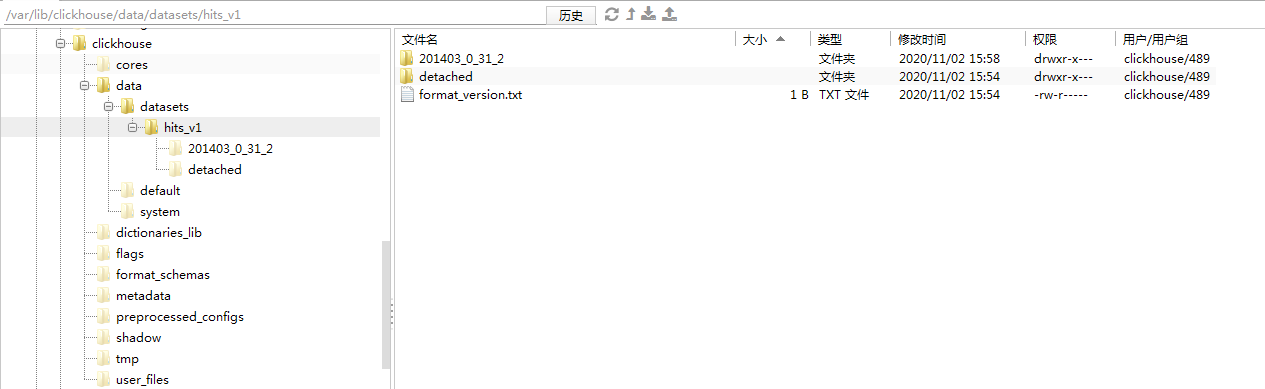

物理存储路径,同样只有一个分区201403

虚拟机备份快照

2.3 测试

首先情况数据,然后是否删除成功。

localhost :) ALTER TABLE datasets.hits_v1 DELETE WHERE 1=1;

ALTER TABLE datasets.hits_v1

DELETE WHERE 1 = 1

Ok.

0 rows in set. Elapsed: 0.027 sec.

localhost :) SELECT * FROM system.mutations WHERE is_done != 1;\G

SELECT *

FROM system.mutations

WHERE is_done != 1

Ok.

0 rows in set. Elapsed: 0.005 sec.

localhost :) SELECT COUNT(*) FROM datasets.hits_v1;

SELECT COUNT(*)

FROM datasets.hits_v1

┌─COUNT()─┐

│ 0 │

└─────────┘

1 rows in set. Elapsed: 0.007 sec.

可以发现删除操作执行成功,数据量为0。

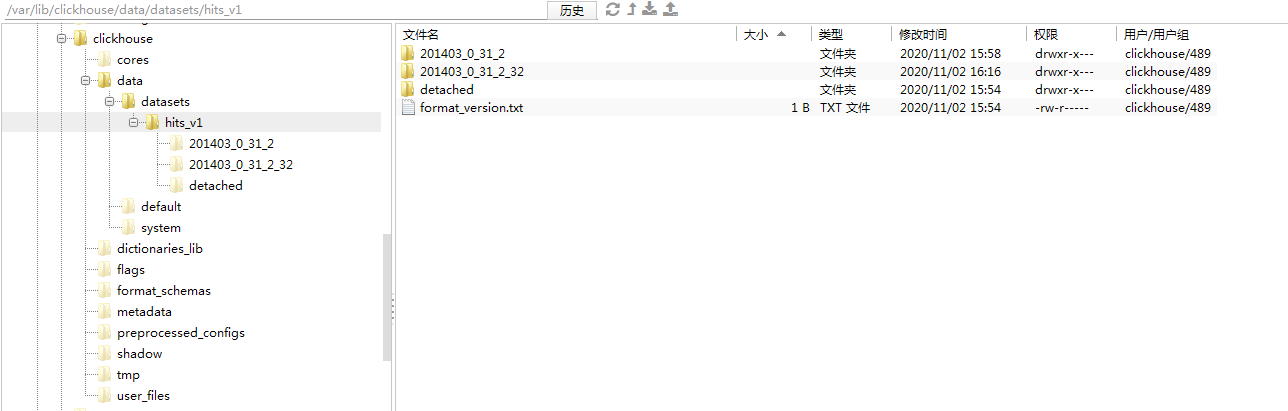

物理存储路径多了一个分区的数据片段。

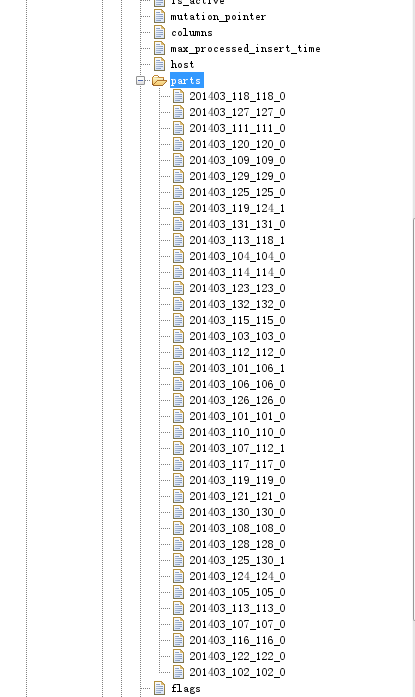

然后恢复快照,执行mutation操作。可以看到已经恢复快照备份前的分区。

localhost :) ALTER TABLE datasets.hits_v1 DELETE WHERE 1=1;

ALTER TABLE datasets.hits_v1

DELETE WHERE 1 = 1

Ok.

0 rows in set. Elapsed: 0.067 sec.

localhost :) SELECT * FROM system.mutations WHERE is_done != 1;\G

SELECT *

FROM system.mutations

WHERE is_done != 1

Ok.

0 rows in set. Elapsed: 0.030 sec.

but没有出现想象中的场景,查看日志

2020.11.02 16:04:18.103450 [ 31 ] {} <Trace> datasets.hits_v1: Found 18 old parts to remove.

2020.11.02 16:04:18.103579 [ 31 ] {} <Debug> datasets.hits_v1: Removing 18 old parts from ZooKeeper

2020.11.02 16:04:18.104756 [ 31 ] {} <Debug> datasets.hits_v1: There is no part 201403_12_12_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:04:18.104799 [ 31 ] {} <Debug> datasets.hits_v1: There is no part 201403_13_13_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:04:18.104813 [ 31 ] {} <Debug> datasets.hits_v1: There is no part 201403_14_14_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:04:18.104826 [ 31 ] {} <Debug> datasets.hits_v1: There is no part 201403_15_15_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:04:18.104868 [ 31 ] {} <Debug> datasets.hits_v1: There is no part 201403_16_16_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:04:18.105030 [ 31 ] {} <Debug> datasets.hits_v1: There is no part 201403_17_17_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:04:18.105057 [ 31 ] {} <Debug> datasets.hits_v1: There is no part 201403_18_18_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:04:18.105071 [ 31 ] {} <Debug> datasets.hits_v1: There is no part 201403_19_19_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:04:18.105280 [ 31 ] {} <Debug> datasets.hits_v1: There is no part 201403_20_20_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:04:18.105333 [ 31 ] {} <Debug> datasets.hits_v1: There is no part 201403_21_21_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:04:18.105395 [ 31 ] {} <Debug> datasets.hits_v1: There is no part 201403_22_22_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:04:18.105772 [ 31 ] {} <Debug> datasets.hits_v1: There is no part 201403_23_23_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:04:18.105807 [ 31 ] {} <Debug> datasets.hits_v1: There is no part 201403_24_24_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:04:18.105998 [ 31 ] {} <Debug> datasets.hits_v1: There is no part 201403_25_25_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:04:18.106023 [ 31 ] {} <Debug> datasets.hits_v1: There is no part 201403_26_26_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:04:18.106049 [ 31 ] {} <Debug> datasets.hits_v1: There is no part 201403_27_27_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:04:18.106251 [ 31 ] {} <Debug> datasets.hits_v1: There is no part 201403_28_28_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:04:18.106280 [ 31 ] {} <Debug> datasets.hits_v1: There is no part 201403_29_29_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:04:18.106311 [ 31 ] {} <Debug> datasets.hits_v1: Removed 18 old parts from ZooKeeper. Removing them from filesystem.

2020.11.02 16:04:18.487063 [ 31 ] {} <Debug> datasets.hits_v1: Removed 18 old parts

发现是可以根据ZooKeeper中的信息恢复,猜测是日志。多来几次几次写入操作,一定要确保日志日志被覆盖掉。

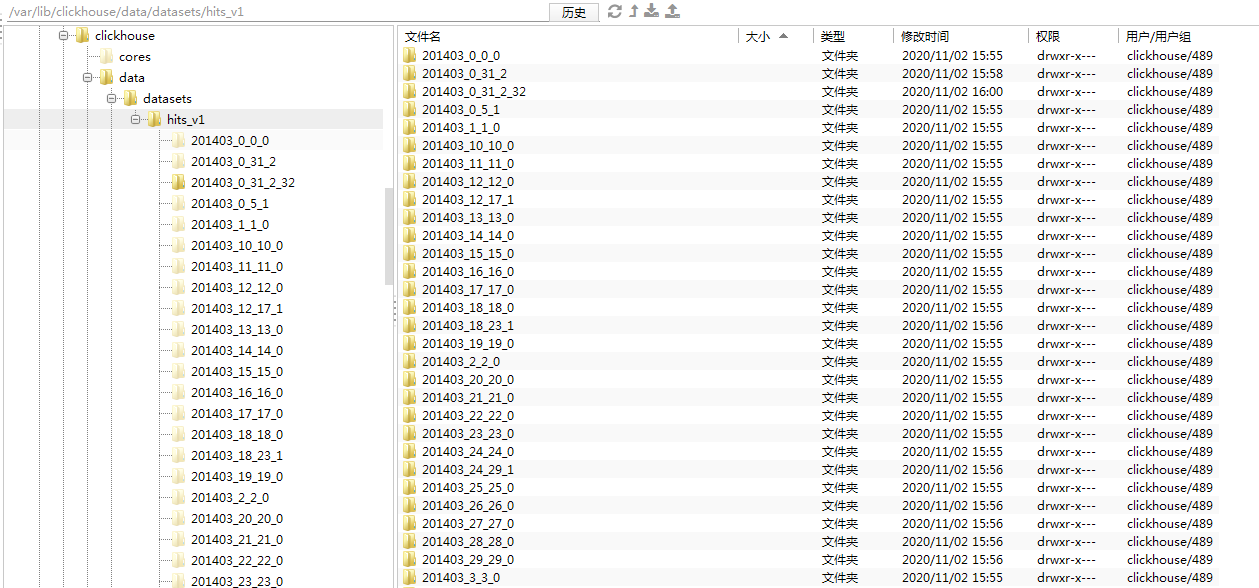

多次删除,写入操作后。日志如下图:

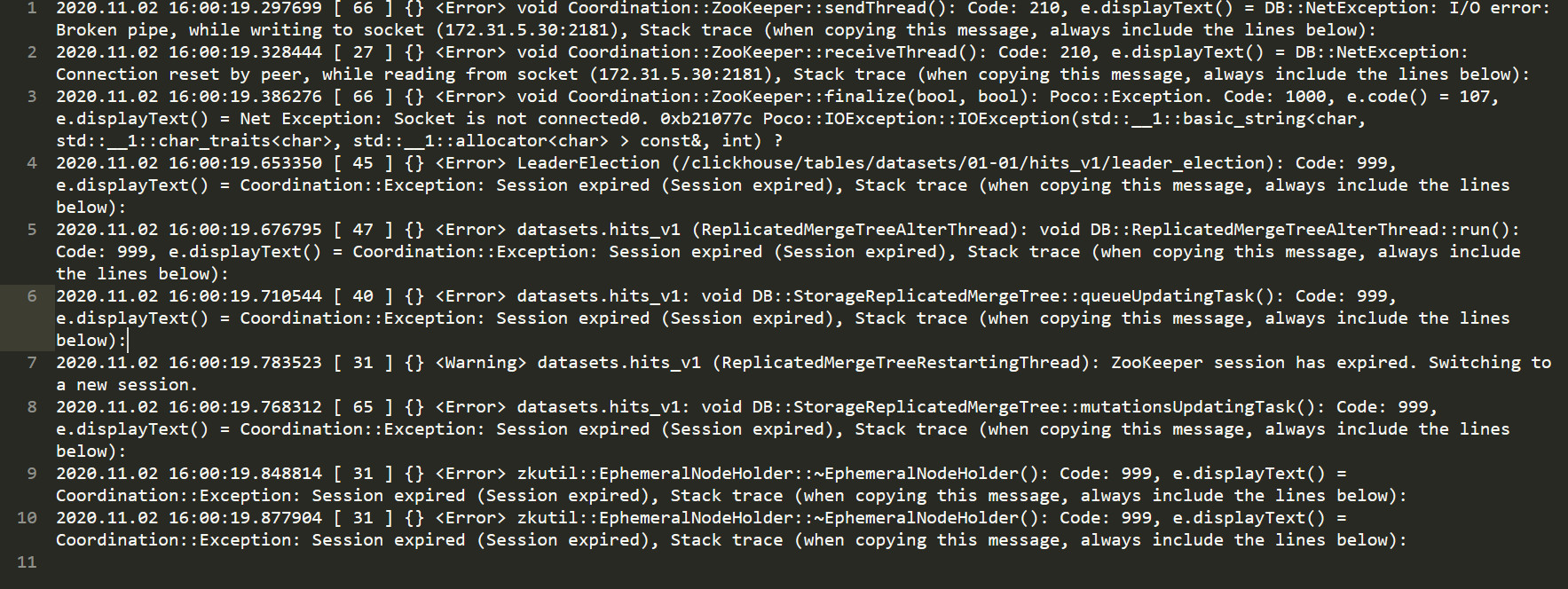

然后再次恢复快照,可以发现场景复现成功。

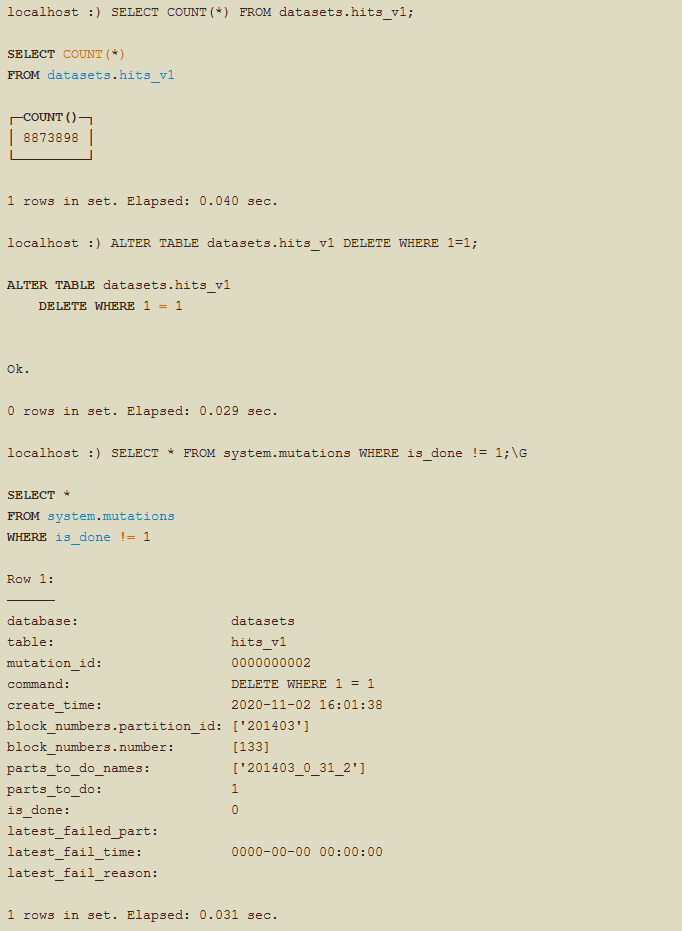

先查看err日志,err日志正常,里面的错误信息是由于恢复快照的zk连接断开,没有关于mutation的信息。

再次查看服务器日志,发现了创建的mutation 0000000002 后台执行时Won't mutate that part and will check it,无法执行,问题完美复现。

2020.11.02 16:01:37.993583 [ 81 ] {f6786bab-1882-48ff-a9da-29a00c1302b7} <Debug> executeQuery: (from [::1]:41772) ALTER TABLE datasets.hits_v1 DELETE WHERE 1 = 1

2020.11.02 16:01:38.019167 [ 81 ] {f6786bab-1882-48ff-a9da-29a00c1302b7} <Trace> datasets.hits_v1: Created mutation with ID 0000000002

2020.11.02 16:01:38.019926 [ 47 ] {} <Information> datasets.hits_v1 (ReplicatedMergeTreeQueue): Loading 1 mutation entries: 0000000002 - 0000000002

2020.11.02 16:01:38.020482 [ 81 ] {f6786bab-1882-48ff-a9da-29a00c1302b7} <Debug> MemoryTracker: Peak memory usage (for query): 0.00 B.

2020.11.02 16:01:38.020630 [ 81 ] {} <Debug> MemoryTracker: Peak memory usage (total): 0.00 B.

2020.11.02 16:01:38.020704 [ 81 ] {} <Information> TCPHandler: Processed in 0.027 sec.

2020.11.02 16:01:41.546458 [ 81 ] {6e7b3da2-a96c-4eb5-bbb8-c12e5cced76a} <Debug> executeQuery: (from [::1]:41772) SELECT * FROM system.mutations WHERE is_done != 1

2020.11.02 16:01:41.552372 [ 81 ] {6e7b3da2-a96c-4eb5-bbb8-c12e5cced76a} <Trace> InterpreterSelectQuery: FetchColumns -> Complete

2020.11.02 16:01:41.552733 [ 81 ] {6e7b3da2-a96c-4eb5-bbb8-c12e5cced76a} <Debug> executeQuery: Query pipeline:

2020.11.02 16:01:41.575776 [ 81 ] {6e7b3da2-a96c-4eb5-bbb8-c12e5cced76a} <Information> executeQuery: Read 3 rows, 505.00 B in 0.029 sec., 103 rows/sec., 16.93 KiB/sec.

2020.11.02 16:01:41.575884 [ 81 ] {6e7b3da2-a96c-4eb5-bbb8-c12e5cced76a} <Debug> MemoryTracker: Peak memory usage (for query): 3.52 KiB.

2020.11.02 16:01:41.576097 [ 81 ] {} <Debug> MemoryTracker: Peak memory usage (total): 3.52 KiB.

2020.11.02 16:01:41.576232 [ 81 ] {} <Information> TCPHandler: Processed in 0.030 sec.

2020.11.02 16:03:53.150822 [ 38 ] {} <Warning> datasets.hits_v1: Part 201403_0_31_2 (that was selected for mutation) with age 303 seconds exists locally but not in ZooKeeper. Won't mutate that part and will check it.

2020.11.02 16:03:53.151912 [ 30 ] {} <Warning> datasets.hits_v1 (ReplicatedMergeTreePartCheckThread): Checking part 201403_0_31_2

2020.11.02 16:03:53.159423 [ 30 ] {} <Error> datasets.hits_v1 (ReplicatedMergeTreePartCheckThread): Unexpected part 201403_0_31_2 in filesystem. Removing.

2020.11.02 16:03:58.255413 [ 47 ] {} <Trace> datasets.hits_v1: Found 18 old parts to remove.

2020.11.02 16:03:58.255508 [ 47 ] {} <Debug> datasets.hits_v1: Removing 18 old parts from ZooKeeper

2020.11.02 16:03:58.257116 [ 47 ] {} <Debug> datasets.hits_v1: There is no part 201403_0_0_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:03:58.257159 [ 47 ] {} <Debug> datasets.hits_v1: There is no part 201403_1_1_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:03:58.257192 [ 47 ] {} <Debug> datasets.hits_v1: There is no part 201403_2_2_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:03:58.257209 [ 47 ] {} <Debug> datasets.hits_v1: There is no part 201403_3_3_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:03:58.257234 [ 47 ] {} <Debug> datasets.hits_v1: There is no part 201403_4_4_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:03:58.257268 [ 47 ] {} <Debug> datasets.hits_v1: There is no part 201403_5_5_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:03:58.257283 [ 47 ] {} <Debug> datasets.hits_v1: There is no part 201403_6_6_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:03:58.257298 [ 47 ] {} <Debug> datasets.hits_v1: There is no part 201403_7_7_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:03:58.257318 [ 47 ] {} <Debug> datasets.hits_v1: There is no part 201403_8_8_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:03:58.257334 [ 47 ] {} <Debug> datasets.hits_v1: There is no part 201403_9_9_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:03:58.257359 [ 47 ] {} <Debug> datasets.hits_v1: There is no part 201403_10_10_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:03:58.257388 [ 47 ] {} <Debug> datasets.hits_v1: There is no part 201403_11_11_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:03:58.257403 [ 47 ] {} <Debug> datasets.hits_v1: There is no part 201403_12_12_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:03:58.257619 [ 47 ] {} <Debug> datasets.hits_v1: There is no part 201403_13_13_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:03:58.257652 [ 47 ] {} <Debug> datasets.hits_v1: There is no part 201403_14_14_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:03:58.257722 [ 47 ] {} <Debug> datasets.hits_v1: There is no part 201403_15_15_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:03:58.257746 [ 47 ] {} <Debug> datasets.hits_v1: There is no part 201403_16_16_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:03:58.257895 [ 47 ] {} <Debug> datasets.hits_v1: There is no part 201403_17_17_0 in ZooKeeper, it was only in filesystem

2020.11.02 16:03:58.257933 [ 47 ] {} <Debug> datasets.hits_v1: Removed 18 old parts from ZooKeeper. Removing them from filesystem.

2020.11.02 16:03:58.610362 [ 47 ] {} <Debug> datasets.hits_v1: Removed 18 old parts

2020.11.02 16:03:58.633618 [ 47 ] {} <Trace> datasets.hits_v1 (ReplicatedMergeTreeCleanupThread): Execution took 378 ms.

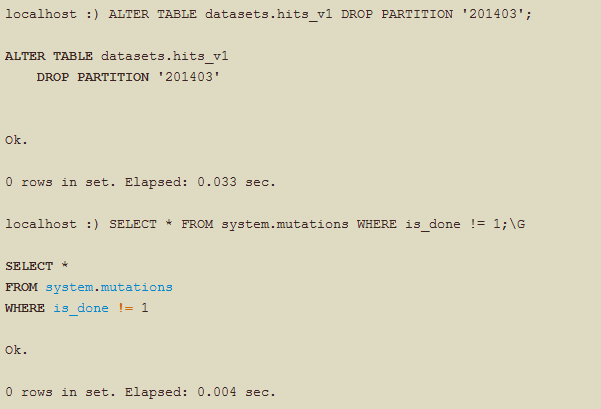

2.4 恢复

这种情况下只能删除分区。

删除分区后,再次查看之前mutation操作执行结果发现执行完成。

后台日志如下:

2020.11.02 16:58:53.408070 [ 81 ] {b81d334a-b769-430b-84e5-b56e0d8b2c42} <Debug> executeQuery: (from [::1]:41772) ALTER TABLE datasets.hits_v1 DROP PARTITION '201403'

2020.11.02 16:58:53.429911 [ 81 ] {b81d334a-b769-430b-84e5-b56e0d8b2c42} <Trace> datasets.hits_v1: Deleted 32 deduplication block IDs in partition ID 201403

2020.11.02 16:58:53.430049 [ 81 ] {b81d334a-b769-430b-84e5-b56e0d8b2c42} <Debug> datasets.hits_v1: Disabled merges covered by range 201403_0_133_999999999_133

2020.11.02 16:58:53.431097 [ 81 ] {b81d334a-b769-430b-84e5-b56e0d8b2c42} <Debug> datasets.hits_v1: Waiting for 01 to pull log-0000000155 to queue

2020.11.02 16:58:53.432434 [ 40 ] {} <Debug> datasets.hits_v1 (ReplicatedMergeTreeQueue): Pulling 1 entries to queue: log-0000000155 - log-0000000155

2020.11.02 16:58:53.433746 [ 40 ] {} <Debug> datasets.hits_v1 (ReplicatedMergeTreeQueue): Pulled 1 entries to queue.

2020.11.02 16:58:53.433897 [ 16 ] {} <Debug> datasets.hits_v1 (ReplicatedMergeTreeQueue): Removed 0 entries from queue. Waiting for 0 entries that are currently executing.

2020.11.02 16:58:53.433959 [ 16 ] {} <Debug> datasets.hits_v1: Removing parts.

2020.11.02 16:58:53.433977 [ 16 ] {} <Information> datasets.hits_v1: Removed 0 parts inside 201403_0_133_999999999_133.

2020.11.02 16:58:53.434147 [ 81 ] {b81d334a-b769-430b-84e5-b56e0d8b2c42} <Debug> datasets.hits_v1: Looking for node corresponding to log-0000000155 in 01 queue

2020.11.02 16:58:53.434876 [ 81 ] {b81d334a-b769-430b-84e5-b56e0d8b2c42} <Debug> datasets.hits_v1: No corresponding node found. Assuming it has been already processed. Found 0 nodes.

2020.11.02 16:58:53.434902 [ 48 ] {} <Trace> datasets.hits_v1 (ReplicatedMergeTreeQueue): Will check if mutation 0000000002 is done

2020.11.02 16:58:53.436001 [ 81 ] {b81d334a-b769-430b-84e5-b56e0d8b2c42} <Debug> MemoryTracker: Peak memory usage (for query): 0.00 B.

2020.11.02 16:58:53.436162 [ 81 ] {} <Debug> MemoryTracker: Peak memory usage (total): 0.00 B.

2020.11.02 16:58:53.436244 [ 81 ] {} <Information> TCPHandler: Processed in 0.028 sec.

2020.11.02 16:58:53.438141 [ 48 ] {} <Trace> datasets.hits_v1 (ReplicatedMergeTreeQueue): Mutation 0000000002 is done

可以看到线程48 检查mutation 0000000002,并处理完成。

「如果这篇文章对你有用,请支持一下哦」

如果这篇文章对你有用,请支持一下哦

使用微信扫描二维码完成支付